Fast Image-based Neural Relighting with Translucency-Reflection Modeling

TL;DR

Abstract

Image-based lighting (IBL) is a widely used technique that renders objects using a high dynamic range image or environment map. However, aggregating the irradiance at the object's surface is computationally expensive, particularly for non-opaque, translucent materials that require volumetric rendering techniques. In this paper we present a fast neural 3D reconstruction and relighting model that extends volumetric implicit models, such as neural radiance fields, to be relightable using IBL. It is general enough to handle materials that exhibit complex light transport effects, such as translucency and glossy reflections from detailed surface geometry. The model makes no simplifying assumptions about the light transport and therefore produces realistic and compelling results. Rendering takes under a second at 800 x 800 resolution (0.72 s on an NVIDIA 3090 GPU and 0.30 s on an A100 GPU) without engineering optimization.

Components

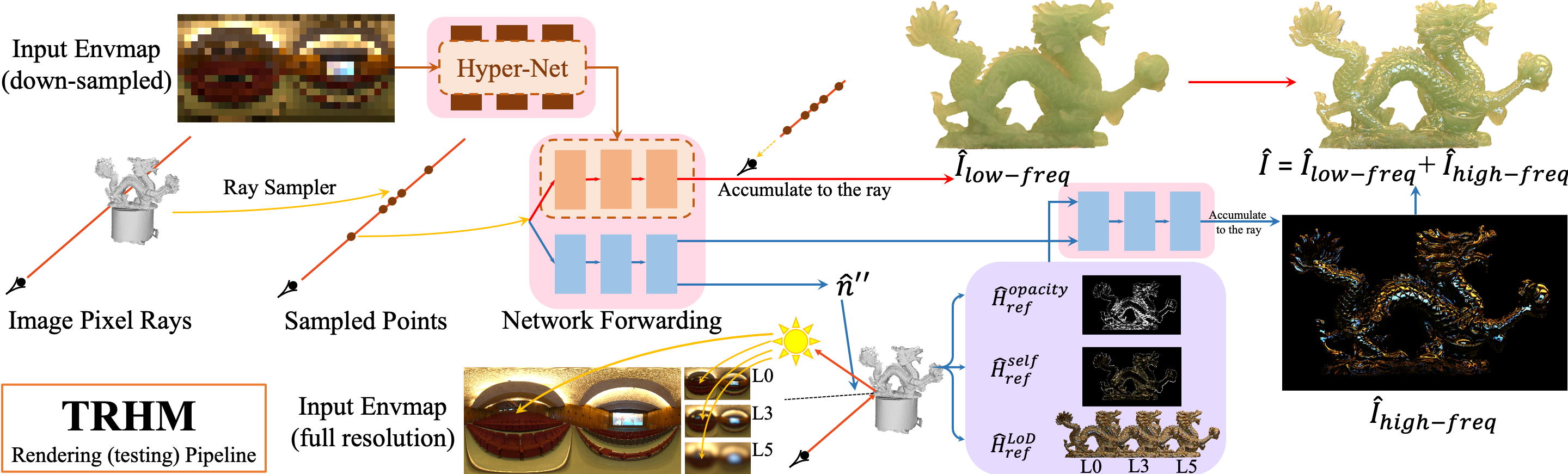

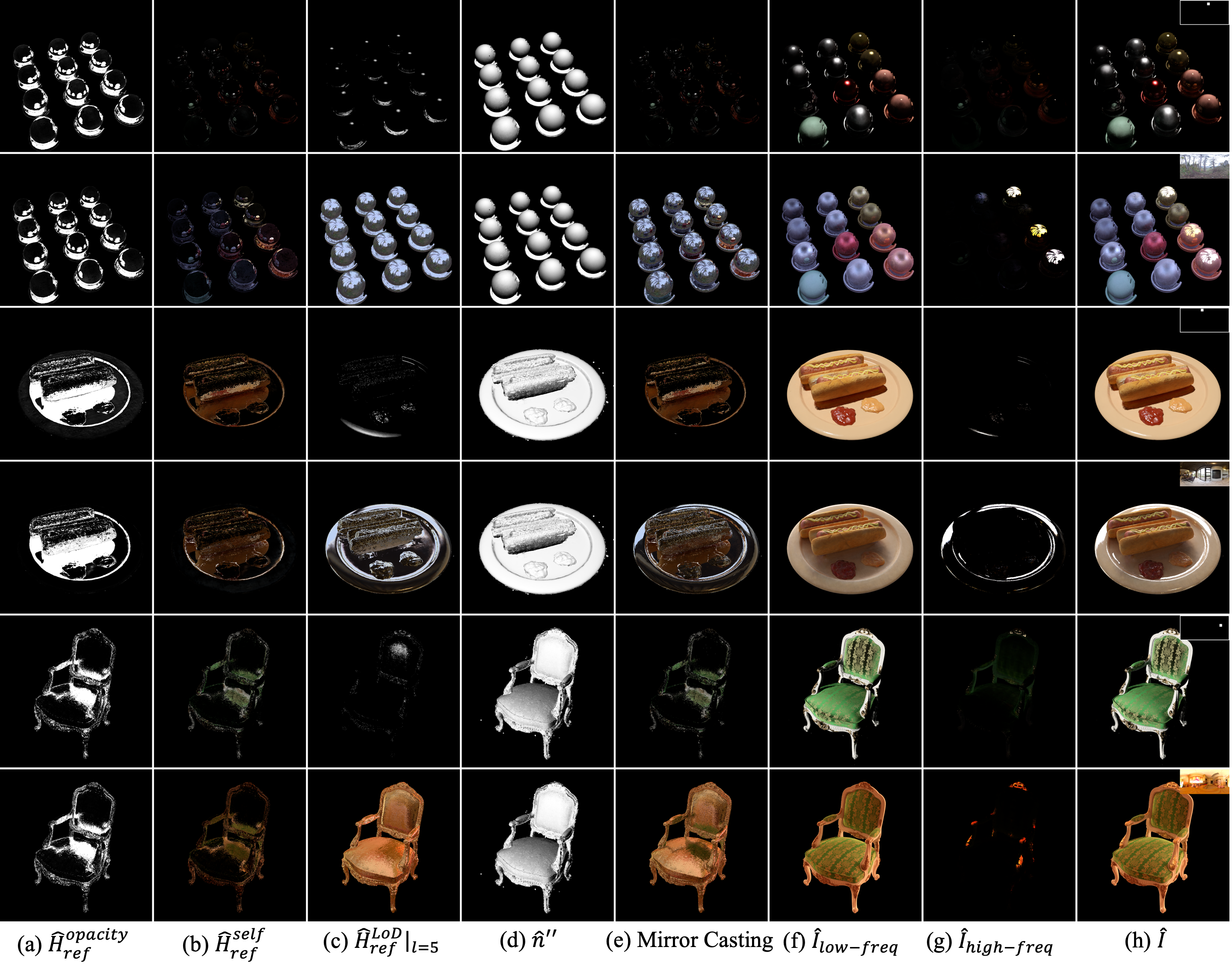

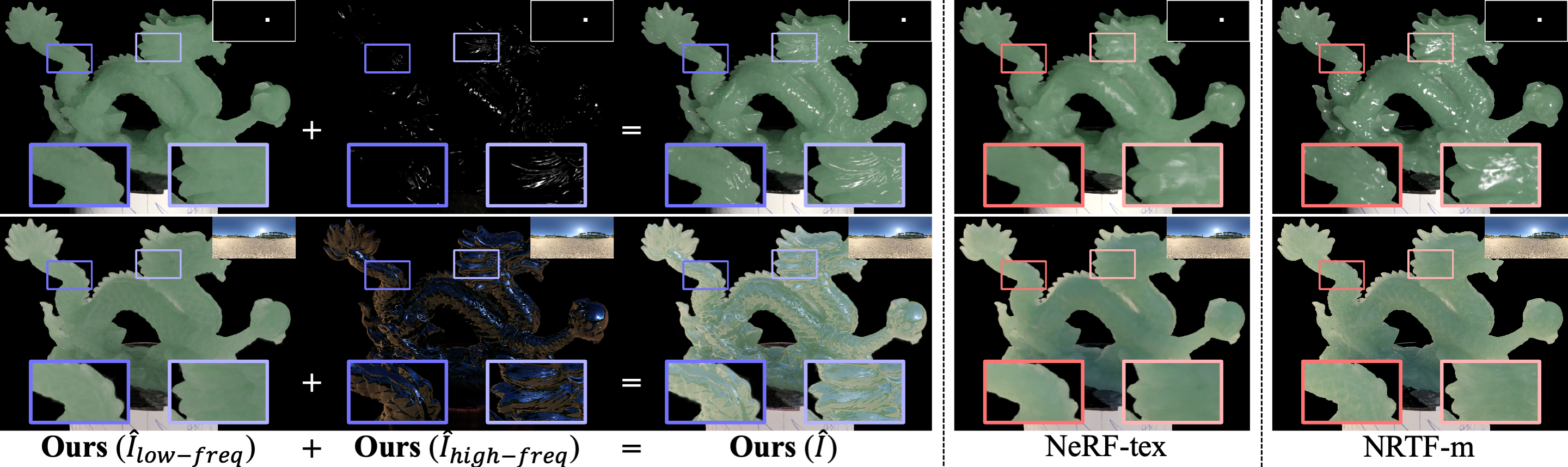

Our optimization and rendering model consists of a low-frequency branch and a high-frequency branch. Together they enable fast rendering of translucency and specular highlights, as well as image-based relighting optimized from one-light-at-a-time (OLAT) data only.
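For readers unfamiliar with how OLAT captures relate to image-based relighting, the following is a minimal sketch of the classical principle: light transport is linear, so an image under an environment map can be approximated as a weighted sum of per-light OLAT images. This illustrates the general idea only and is not the model described here; the function name `relight_from_olat`, the array layout, and the per-light RGB weights (assumed to be sampled from the environment map beforehand) are all hypothetical.

```python
import numpy as np

def relight_from_olat(olat_images, light_weights):
    """Weighted sum of OLAT images (light superposition).

    olat_images:   (L, H, W, 3) linear (HDR) images, one per light.
    light_weights: (L, 3) RGB intensity of the environment map in the
                   direction of each light (assumed precomputed).
    Returns an (H, W, 3) relit image in linear radiance.
    """
    olat_images = np.asarray(olat_images, dtype=np.float64)
    light_weights = np.asarray(light_weights, dtype=np.float64)
    if olat_images.shape[0] != light_weights.shape[0]:
        raise ValueError("one weight row is required per OLAT image")
    # Sum over the light axis l, scaling each colour channel c independently.
    return np.einsum("lhwc,lc->hwc", olat_images, light_weights)

# Toy example: two lights, 2 x 2 pixel images with constant values.
olat = np.stack([np.full((2, 2, 3), 1.0), np.full((2, 2, 3), 2.0)])
weights = np.array([[0.5, 0.5, 0.5], [1.0, 1.0, 1.0]])
relit = relight_from_olat(olat, weights)
```

The sum must be taken in linear radiance; any tone mapping or gamma correction would be applied afterwards.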

On Synthetic Data

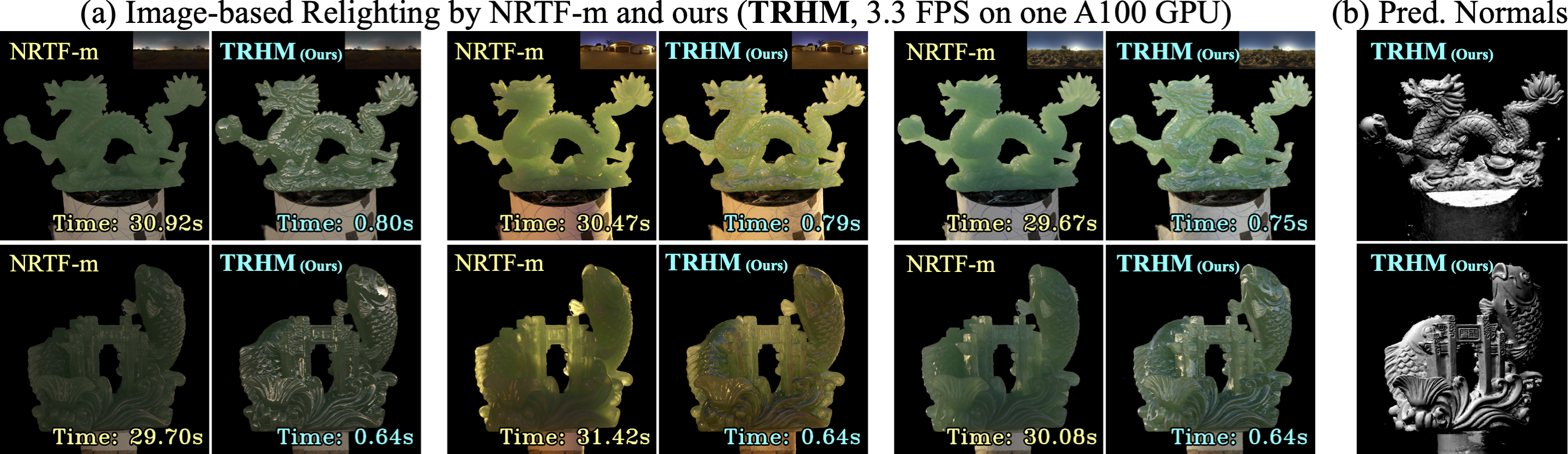

On Real Light Stage Data

Compared with NeRF-tex (NeRF with three OLAT dimensions as additional inputs) and NRTF-m (optimized in an OLAT setting similar to ours), our predictions on the highlight pixels are of higher quality, both quantitatively (OLAT) and qualitatively (OLAT + envmap).

Demo Videos

Fast rendering of our low-frequency component (left), our high-frequency component (middle, shown with HDR scaling), and our final prediction (right).

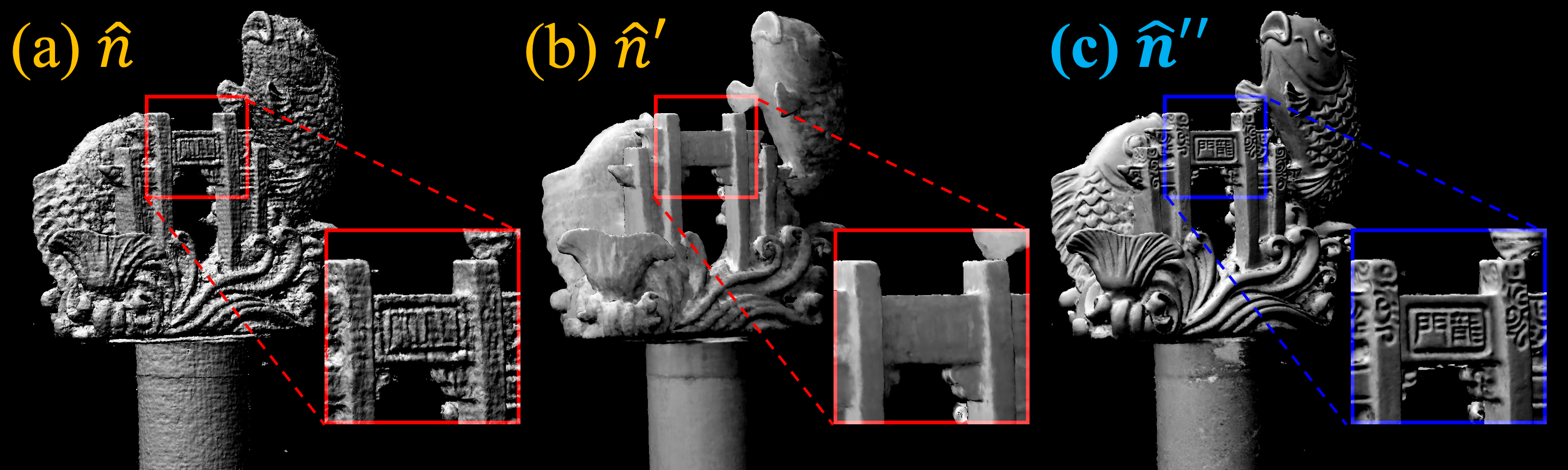

Normals for Local Modeling

Our predicted normals for reflection (c) capture local detail with higher fidelity than the gradient of the raw density (a) and the Ref-NeRF normal, which is smoothed by design (b).
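As background for baseline (a), a density-gradient normal is commonly taken as the negative, normalized gradient of the volume density with respect to position. The sketch below estimates it with central finite differences on a toy analytic density. It is an illustration under stated assumptions, not the implementation used here: `density_normal` and `sphere_density` are hypothetical names, and a real radiance field would typically obtain the gradient by automatic differentiation of the network.

```python
import numpy as np

def density_normal(density_fn, x, eps=1e-4):
    """Normal at point x as the negative normalized density gradient.

    density_fn: callable mapping a 3-vector to a scalar density.
    The gradient is approximated with central finite differences.
    """
    x = np.asarray(x, dtype=np.float64)
    grad = np.zeros(3)
    for i in range(3):
        step = np.zeros(3)
        step[i] = eps
        grad[i] = (density_fn(x + step) - density_fn(x - step)) / (2.0 * eps)
    norm = np.linalg.norm(grad)
    if norm == 0.0:
        return grad  # undefined normal in a region of constant density
    # Density increases towards the inside, so the outward normal is -grad.
    return -grad / norm

def sphere_density(p, radius=1.0, sharpness=10.0):
    """Toy density (assumption): a soft sphere, high inside, low outside."""
    return 1.0 / (1.0 + np.exp(sharpness * (np.linalg.norm(p) - radius)))

normal = density_normal(sphere_density, [1.0, 0.0, 0.0])
```

On this toy sphere the estimated normal at a surface point is the outward radial direction. On a learned density field the same quantity tends to be noisy.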