Differentiable Gradient Sampling for Learning Implicit 3D Scene Reconstructions from a Single Image

Shizhan Zhu, Sayna Ebrahimi, Angjoo Kanazawa, Trevor Darrell

ICLR 2022

Abstract

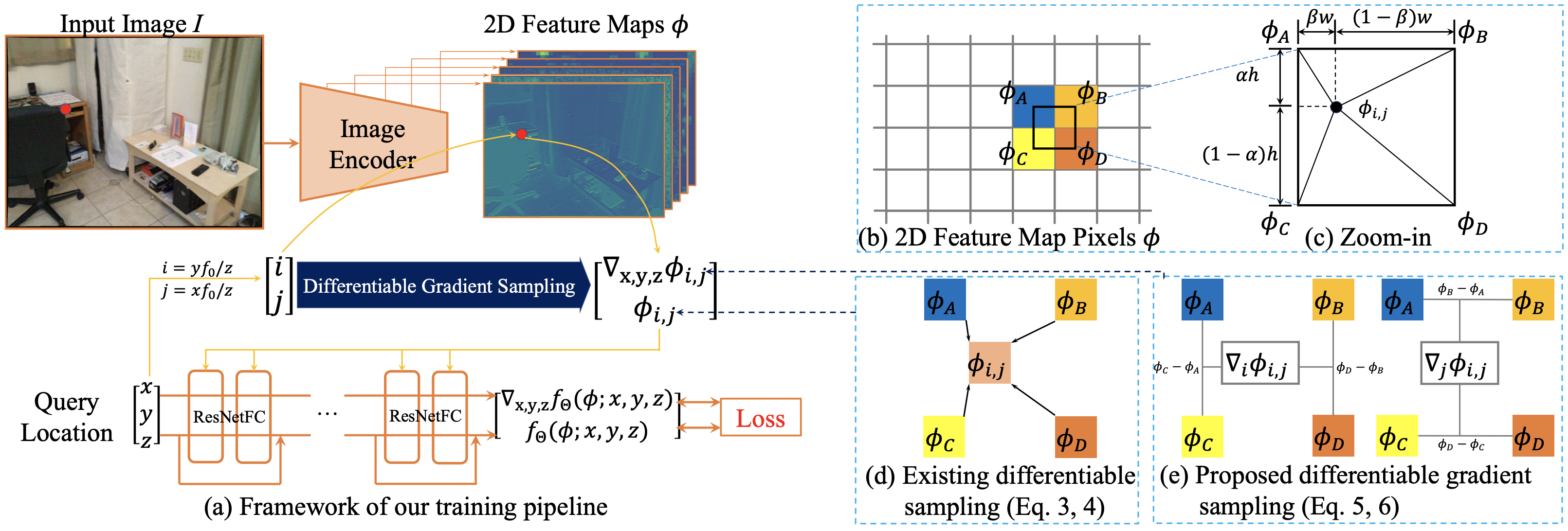

Implicit neural shape functions, e.g. occupancy fields or signed distance functions (SDF), are promising 3D representations for modeling clean mesh surfaces. Existing approaches that use these representations for single-view object reconstruction require supervision signals from every location in the scene, which poses difficulties when extending to real-world scenarios where ideal watertight geometric training data is hard to obtain. In such cases, constraints on the spatial gradient of the implicit field, rather than on the value itself, can provide a training signal. This has not been employed as a source of supervision for single-view reconstruction, in part because of the difficulty of differentiably sampling a spatial gradient from a feature map. In this paper, we derive a novel closed-form Differentiable Gradient Sampling (DGS) solution that enables backpropagation of the loss on spatial gradients to the feature maps, thus allowing training on large-scale scenes without dense 3D supervision. As a result, we demonstrate single-view implicit surface reconstruction on real-world scenes by learning directly from a scanned dataset. Our model performs well when generalizing to unseen images from Pix3D or downloaded directly from the internet (Fig. 1). Extensive quantitative analysis confirms that our proposed DGS module plays an essential role in our learning framework. The full code is available at https://github.com/zhusz/ICLR22-DGS.
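To make "sampling a spatial gradient from a feature map" concrete, the sketch below bilinearly samples a single-channel 2D grid at a continuous location and returns the closed-form derivative of the sampled value with respect to that location. It is an illustration only, not the DGS module from the paper: the function name `bilinear_sample_with_gradient`, the pixel-coordinate convention, and the 2D single-channel setting are all assumptions made for this example, and the backward pass that the paper derives is not shown.

```python
import math


def bilinear_sample_with_gradient(feat, x, y):
    """Bilinearly sample feat at (x, y) and return (value, dv/dx, dv/dy).

    Hypothetical helper for illustration. feat is a list of rows
    (feat[row][col]); x runs along columns and y along rows, both in
    pixel units. The grid must be at least 2 x 2.
    """
    h, w = len(feat), len(feat[0])

    # Pick the cell containing (x, y), clamped so all four corners exist.
    x0 = min(max(int(math.floor(x)), 0), w - 2)
    y0 = min(max(int(math.floor(y)), 0), h - 2)
    tx, ty = x - x0, y - y0

    f00 = feat[y0][x0]          # top-left corner
    f01 = feat[y0][x0 + 1]      # top-right corner
    f10 = feat[y0 + 1][x0]      # bottom-left corner
    f11 = feat[y0 + 1][x0 + 1]  # bottom-right corner

    # Standard bilinear interpolation of the four corner values.
    value = ((1 - tx) * (1 - ty) * f00 + tx * (1 - ty) * f01
             + (1 - tx) * ty * f10 + tx * ty * f11)

    # Closed-form spatial gradient: differentiate the expression above
    # with respect to x and y. Both terms are linear in the corner values.
    dv_dx = (1 - ty) * (f01 - f00) + ty * (f11 - f10)
    dv_dy = (1 - tx) * (f10 - f00) + tx * (f11 - f01)
    return value, dv_dx, dv_dy


# Example grid where feat[row][col] = 3 * row + col, so the field is
# linear with slope 1 along x and slope 3 along y.
grid = [[0.0, 1.0, 2.0],
        [3.0, 4.0, 5.0],
        [6.0, 7.0, 8.0]]
value, dv_dx, dv_dy = bilinear_sample_with_gradient(grid, 0.5, 1.25)
```

Because `dv_dx` and `dv_dy` are linear in the four corner values, a loss placed on the sampled gradient has a well-defined derivative with respect to the feature map itself, which is the kind of dependency that a gradient-based training signal needs to propagate through.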

Framework

Video

Citation

@inproceedings{zhu2021differentiable,

title={Differentiable Gradient Sampling for Learning Implicit 3D Scene Reconstructions from a Single Image},

author={Zhu, Shizhan and Ebrahimi, Sayna and Kanazawa, Angjoo and Darrell, Trevor},

booktitle={International Conference on Learning Representations},

year={2022}

}